HARVARD CONUNDRUM [via NYT and New Yorker, vs. Her Claim on LinkedIn and Her Private Website]

This piece draws on the perspectives of Zoé Ziani, Ph.D., Professor Francesca Gino, the NYT, and the New Yorker.

NYC, 8:02 a.m.

Exhibit A of how elitism and hierarchy hurt the profession: "How dare you, a lowly graduate student, challenge a Harvard professor?" Most papers fail to replicate. Some rely on magic parameters that the authors pulled out of a hat. Very few generalize. A long-overdue cleanup is happening, and whistleblowers need to be protected.

All human relationships are built on trust. Honesty is a must: once trust is gone, it's gone for good. The main victim is society at large, which pays for misguided innovation and false beliefs when scientific advances are supposed to bring about real innovation and the adoption of correct beliefs. At this point, it's about time we had more open conversations about the abuse of graduate students by their advisors in academia, even going as far as naming names and citing specific instances of mistreatment. This is a toxic culture at its core. Anyone proven to have committed data fraud should serve jail time. Each such case wastes millions of taxpayers' dollars and ruins the careers of hundreds of very bright researchers. It is one of the most disgusting things one can do to society.

Perhaps academics could sign a "Pledge of Ownership" to replace the infamous "Pledge of Honesty"? For example, as proposed by Professor Michael Ewens: "I hereby own all mistakes in my published work and never blame my RAs, whose names I will always remember."

Data fraud is often framed as a victimless crime, or else the perpetrators frame themselves as the victim. But to graduate students it is anything but victimless. It creates a fog around ALL research now. Can we trust that any study isn't faked?

A cost of scientific fraud: it creates the expectation that experiments should give uncomplicated, intuitive results, and thereby encourages more misleading or even fraudulent science. That makes it a dangerous vicious cycle, a bit like corruption. The other common victim is the reputation of a field as a whole. Diffuse costs, but they exist nonetheless.

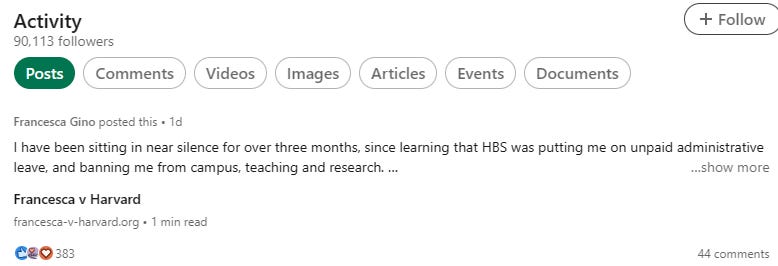

But the true victims are often graduate students who struggle to replicate a prominent study, face professional ostracism, and find that their only recourse is to leave the field or become a whistleblower. The New Yorker and the NYT have published a fantastic INVESTIGATION revealing how the fraud in Dan Ariely's and Francesca Gino's research was originally identified by Zoé Ziani, Ph.D., JUST 2-3 hours before Francesca Gino's latest claim on LinkedIn.

About five hours after the latest LinkedIn post, Zoé Ziani, Ph.D., gave a new response:

The day almost two years ago when Harvard Business School informed Francesca Gino, a prominent professor, that she was being investigated for data fraud also happened to be her husband's 50th birthday. An administrator instructed her to turn in any Harvard-issued computer equipment that she had by 5 p.m. She canceled the birthday celebration she had planned and walked the machines to campus, where a University Police officer oversaw the transfer.

It really is quite an analogy, and it makes one think of being encouraged to attend cocktail mixers at conferences to network. Perhaps academics have grown a little too used to wining and dining. It should particularly horrify any consumer of science news and science reporting, a group that includes a lot of science fiction writers.

“We ended up both going,” Dr. Gino recalled. “I couldn’t go on my own because I felt like, I don’t know, the earth was opening up under my feet for reasons that I couldn’t understand.”

Everyone in academia or industry that makes decisions based on the fraudulent data is a victim. If a company lies on its financial statements it hurts the investors that made financial decisions based on those lies. The same applies to lying in your research papers.

The school told Dr. Gino it had received allegations that she manipulated data in four papers on topics in behavioral science, which straddles fields like psychology, marketing and economics.

Dr. Gino published the four papers under scrutiny from 2012 to 2020, and fellow academics had cited one of them more than 500 times. The paper found that asking people to attest to their truthfulness at the top of a tax or insurance form, rather than at the bottom, made their responses more accurate because it supposedly activated their ethical instincts before they provided information.

In the past few years, eminent behavioral scientists have come to regret their participation in the fantasy that kitschy modifications of individual behavior will repair the world. George Loewenstein, a titan of behavioral science, has refashioned his research program, conceding that his own work might have contributed to an emphasis on the individual at the expense of the systemic. 'This is the stuff that C.E.O.s love, right?' said Luigi Zingales, an economist at the University of Chicago. 'It's cutesy, it's not really touching their power, and pretends to do the right thing.' At the end of Joseph Simmons's unpublished post, he writes, 'An influential portion of our literature is effectively a made-up story of human-like creatures who are so malleable that virtually any intervention administered at one point in time can drastically change their behavior.' He adds that a 'field cannot reward truth if it does not or cannot decipher it, so it rewards other things instead. Interestingness. Novelty. Speed. Impact. Fantasy.'

The Data Colada guys have always believed that the replication crisis might be better understood as a 'credibility revolution' in which their colleagues would ultimately choose rigor. The end result might be a field that's at once more boring and more reputable. Any 'academic' who says you can't question a published paper, or another academic, should be ashamed and shunned from the field. The entire premise of science and research is to question everything.

Though she did not know it at the time, Harvard had been alerted to the evidence of fraud a few months earlier by three other behavioral scientists who publish a blog called Data Colada, which focuses on the validity of social science research. The bloggers said it appeared that Dr. Gino had tampered with data to make her studies appear more impressive than they were. In some cases, they said, someone had moved numbers around in a spreadsheet so that they better aligned with her hypothesis. In another paper, data points appeared to have been altered to exaggerate the finding.

Their tip set in motion an investigation that, roughly two years later, would lead Harvard to place Dr. Gino on unpaid leave and seek to revoke her tenure — a rare step akin to career death for an academic. It has prompted her to file a defamation lawsuit against the school and the bloggers, in which she is seeking at least $25 million, and has stirred up a debate among her Harvard colleagues over whether she has received due process.

Harvard said it “vehemently denies” Dr. Gino’s allegations, and a lawyer for the bloggers called the lawsuit “a direct attack on academic inquiry.”

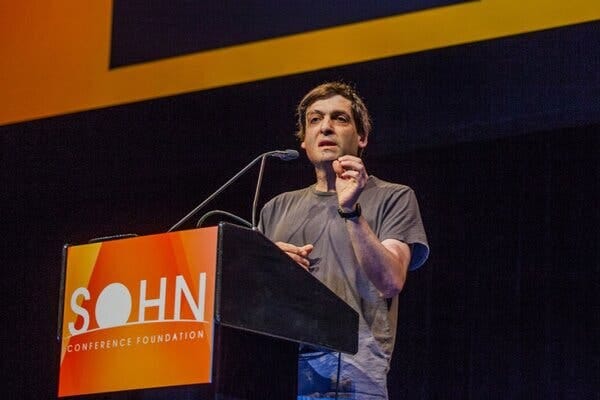

Prof. Francesca Gino speaking at an executive education event at Harvard Business School.

Credit = Brooks Kraft/Harvard University

Perhaps most significant, the accusations against Dr. Gino inflamed a long-simmering crisis within the field.

Many behavioral scientists believe that, once we better understand how humans make decisions, we can find relatively simple techniques to, say, help them lose weight (by moving healthy foods closer to the front of a buffet) or become more generous (automatically enrolling people in organ donor programs).

The field enjoyed a heyday in the first decade of the 2000s, when it spawned a ream of airport best-sellers and viral blog posts, and a leading figure bagged a Nobel Prize. But it has been fending off credibility questions for almost as long as it has been spinning off TED Talks. In recent years, scholars have struggled to reproduce a number of these findings, or discovered that the impact of these techniques was smaller than advertised.

Fraud, though, is something else entirely. Dozens of Dr. Gino’s co-authors are now scrambling to re-examine papers they wrote with her. Dan Ariely, one of the best-known figures in behavioral science and a frequent co-author of Dr. Gino’s, also stands accused of fabrication in at least one paper.

Though the evidence against Dr. Gino, 45, appears compelling, it remains circumstantial, and she denies having committed fraud, as does Dr. Ariely. Even the bloggers, who published a four-part series laying out their case in June and a follow-up this month, have acknowledged that there is no smoking gun proving it was Dr. Gino herself who falsified data.

That has left colleagues, friends, former students and, well, armchair behavioral scientists to sift through her life in search of evidence that might explain what happened. Was it all a misunderstanding? A case of sloppy research assistants or rogue survey respondents?

Or had we seen the darker side of human nature — a subject Dr. Gino has studied at length — poking through a meticulously fashioned facade?

During more than five hours of conversation, Dr. Gino was proud of her accomplishments, at times defiant toward her accusers and occasionally empathetic to those who, she said, mistakenly believed the evidence of fraud.

“I don’t blame readers of the blog for coming to that conclusion,” she said, adding, “But it’s important to know there are other explanations.”

Dr. Gino was something of an academic late bloomer. After growing up in Tione di Trento, a small town in Italy, she earned a Ph.D. in economics and management from an Italian university in 2004, then did a postdoctoral fellowship at Harvard Business School. But she did not receive a single tenure-track offer in the United States after completing her fellowship.

She seemed to romanticize American academic life and worried that she would have to settle for a consulting job or university post in Italy, where she had a lead.

“I have a vivid memory of being in an airport somewhere in Europe — I think in Frankfurt — in tears,” she recalled.

The job she eventually landed, a two-year position as a visiting professor at Carnegie Mellon University, arose when a Harvard mentor lobbied a former student on the faculty there to give her a chance.

In conversation, Dr. Gino can come across as formal. The slight stiltedness of her nonnative English merges with the circumlocution of business-school lingo to produce phrases like “the most important aspect is to embrace a learning mind-set” and “I believe we’re going to move forward in a positive way.”

But she also exhibits a certain steeliness. “I am a well-organized person — I get things done,” she told me at one point. She added: “It can take forever to publish papers. What’s in my control, I execute at my pace, my rigor.”

Dr. Gino distinguished herself at Carnegie Mellon with a ferocious appetite for work. “She thrived on and put more pressure on herself than anyone would have,” said Sam Swift, a graduate student in the same group. Shortly after starting, Dr. Gino dusted off a project that had stalled out and, within weeks, had whipped up an entire draft of a paper that was later accepted for publication.

After Carnegie Mellon, she took a position in 2008 as an assistant professor at the University of North Carolina — a respectable landing spot, to be sure, but not one regarded as a major hub for behavioral research. Soon, however, a series of projects she had started years earlier began appearing in journals, often with high-profile co-authors. The volume of publications she notched in a short period was turning her into an academic star.

Among those co-authors was Dr. Ariely, who moved from the Massachusetts Institute of Technology to Duke around the same time Dr. Gino arrived at North Carolina. Dr. Ariely entered the public consciousness early the same year with the publication of his best-selling book, “Predictably Irrational: The Hidden Forces That Shape Our Decisions.”

Dr. Gino and Dan Ariely wrote more than 10 papers together on topics like dishonesty.

Credit = Chris Goodney/Bloomberg

The book helped introduce mainstream audiences to the quirks of human reasoning that economists traditionally ignored because they assumed people act in their self-interest. Behavioral science seemed to offer easy fixes for nonrational acts, such as our tendency to save too little or put off medical visits. It rode a wave of popular interest in social science, which had made hits of recent books like “The Tipping Point,” by the journalist Malcolm Gladwell, and “Freakonomics,” by the economist Steven Levitt and the journalist Stephen Dubner.

Dr. Gino and Dr. Ariely became frequent co-authors, writing more than 10 papers together over the next six years. The particular academic interest they shared was a relatively new one for Dr. Gino: dishonesty.

While the papers she wrote with Dr. Ariely were only a portion of her prodigious output, many made a splash. One found that people tend to emulate cheating by other members of their social group (that cheating can, in effect, be contagious), and another posited that creative people tend to be more dishonest. In all, four of her six most cited papers, out of more than 100, were written with Dr. Ariely.

Dr. Gino seemed to value the relationship. “She talked about him a lot,” said Tina Juillerat, a graduate student who worked with Dr. Gino at the university. “She really seemed to admire Ariely.”

In our conversations, Dr. Gino seemed eager to minimize the relationship. She said she did not consider Dr. Ariely a mentor and had frequently worked with his students and postdocs rather than with him directly. (Dr. Ariely said that “for many years, Dr. Gino was a friend and collaborator.”)

Dr. Ariely is famous among colleagues and students for his impatience with what he regards as pointless rules, which they say he grudgingly abides by; Dr. Gino comes off as something of a stickler. But they seemed to share an ambition: to show the power of small interventions to elicit surprising changes in behavior. Counting to 10 before choosing what to eat can help people select healthier options (Dr. Gino); asking people to recall the Ten Commandments before a test encourages them to report their results more honestly (Dr. Ariely).

By 2009, Dr. Gino had begun to feel isolated in North Carolina and let it be known that she wanted to relocate. This time, it was the schools that seemed desperate to land her, rather than vice versa. A number of competitors recruited her, but she eventually accepted an offer from Harvard.

Within a few years, Dr. Gino had tenure and a team of students and researchers who could run experiments, analyze the data and write the papers, which she helped conceive and edit. The arrangement, which is common among tenured faculty members, allowed her to leverage herself more effectively. She was pulled into the jet stream of talks and NPR cameos and consulting projects.

In 2018, she published her own mass-market book, “Rebel Talent: Why It Pays to Break the Rules at Work and in Life.” “Rebels are people who break rules that should be broken,” Dr. Gino told NPR, summarizing her thesis. “It creates positive change,” she added.

It’s often difficult to identify the moment when an intellectual movement jumps the shark and becomes an intellectual fad — or, worse, self-parody.

But in behavioral science, many scholars point to an article published in a mainstream psychology journal in 2011 claiming evidence of precognition — that is, the ability to sense the future. In one experiment, the paper’s author, an emeritus professor at Cornell, found that more than half the time participants correctly guessed where an erotic picture would show up on a computer screen before it appeared. He referred to the approach as “time-reversing” certain psychological effects.

The paper used methods that were common in the field at the time, like relying on relatively small samples. Increasingly, those methods looked like they were capturing statistical flukes, not reality.

“If some people have ESP, why don’t they go to Las Vegas and become rich?” Colin Camerer, a behavioral economist at the California Institute of Technology, told me. (Behavioral economists root their work in economic concepts like incentives as well as insights from psychology; the line between them and behavioral scientists can be blurry.)

In her 2018 book, “Rebel Talent: Why It Pays to Break the Rules at Work and in Life,” Dr. Gino writes that rule-breaking can be constructive.

Credit = Amir Hamja/The New York Times

Few scholars were more affronted by the turn their discipline was taking than Uri Simonsohn and Joseph Simmons, who were then at the University of Pennsylvania, and Leif Nelson of the University of California, Berkeley.

The three behavioral scientists soon wrote an influential 2011 paper showing how certain long-tolerated practices in their field, like cutting off a five-day study after three days if the data looked promising, could lead to a rash of false results. (As a matter of probability, the first three days could have lucky draws.) The paper shed light on why many scholars were having so much trouble replicating their colleagues’ findings, including some of their own.
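A small simulation makes the point about cutting a study short concrete. This is a minimal sketch, not the 2011 paper's own analysis, and its settings are illustrative assumptions: two groups with no true difference between them, normally distributed outcomes with known unit variance (so a simple z-test applies), 20 hypothetical participants per group per day, a 5 percent significance threshold, and a single early look after day three. The helper names `two_sided_p`, `run_study`, and `false_positive_rate` are invented for this example.

```python
import math
import random
from statistics import fmean


def two_sided_p(a, b):
    """Two-sided p-value for a difference in group means.

    Assumes outcomes have known unit variance, so a z-test is exact;
    this keeps the sketch standard-library only.
    """
    z = (fmean(a) - fmean(b)) / math.sqrt(1 / len(a) + 1 / len(b))
    return math.erfc(abs(z) / math.sqrt(2))


def run_study(rng, per_day=20, days=5, peek_day=None, alpha=0.05):
    """Simulate one two-group study in which there is NO true effect.

    Returns True if the study ends with a 'significant' result.
    If peek_day is set, the data are tested after that day and the
    study stops early when p < alpha (the data "looked promising").
    """
    a, b = [], []
    for day in range(1, days + 1):
        a.extend(rng.gauss(0, 1) for _ in range(per_day))
        b.extend(rng.gauss(0, 1) for _ in range(per_day))
        if day == peek_day and two_sided_p(a, b) < alpha:
            return True  # stopped after an early "lucky draw"
    # Otherwise run the full study and test once at the end.
    return two_sided_p(a, b) < alpha


def false_positive_rate(peek_day, trials=10_000, seed=1):
    """Share of null studies that still report a significant effect."""
    rng = random.Random(seed)
    hits = sum(run_study(rng, peek_day=peek_day) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    print(f"fixed five-day design:        {false_positive_rate(None):.3f}")
    print(f"stop after day 3 if p < 0.05: {false_positive_rate(3):.3f}")
```

Because the simulated effect is zero, every "significant" study here is a false positive. The fixed five-day design should land near the nominal 5 percent, while the stop-if-promising version should come out noticeably higher; each additional peek would inflate the rate further.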

Two years later, the three men launched their blog, Data Colada, with this tagline below a logo of an umbrella-topped cocktail glass: “Thinking about evidence, and vice versa.” The site became a hub for nerdy discussions of statistical methods — and, before long, various research crimes and misdemeanors.

Dr. Gino and Dr. Ariely have always kept their focus firmly within the space-time continuum. Still, they sometimes produced work that raised eyebrows, if not fraud accusations, among other scholars. In 2010, they and a third colleague published a paper that found that people cheated more when they wore counterfeit designer sunglasses.

“We suggest that a product’s lack of authenticity may cause its owners to feel less authentic themselves,” they concluded, “and that these feelings then cause them to behave dishonestly.”

This genre of study, loosely known as “priming,” goes back decades. The original, modest version is ironclad: A researcher shows a subject a picture of a cat, and the subject becomes much more likely to fill in the missing letter in D_G with an “O” to spell “DOG,” rather than, say, DIG or DUG.

But in recent decades, the priming approach has migrated from word associations to changes in more complex behaviors, like telling the truth — and many scientists have grown skeptical of it. That includes the Nobel laureate Daniel Kahneman, one of the pioneers of behavioral economics, who has said the effects of so-called social priming “cannot be as large and as robust” as he once assumed.

Dr. Gino said her work in this vein had followed accepted practices at the time; Dr. Ariely said findings could be sensitive to experimental conditions, such as how closely participants read instructions.

Other subtle cues purporting to pack a big punch have come in for similar scrutiny in recent years. Another Harvard Business School professor, Amy Cuddy, who had become a get-ahead guru beloved by Sheryl Sandberg and Cosmopolitan magazine, resigned in 2017 after criticism by Data Colada and other sites of a widely discussed paper on how so-called power poses, like standing with your legs spread out, could boost testosterone and lower stress.

In 2021, the Data Colada bloggers, citing the help of a team of researchers who chose to remain anonymous, posted evidence that a field experiment overseen by Dr. Ariely relied on fabricated data, which he denied. The experiment, which appeared in a paper co-written by Dr. Gino and three other colleagues, found that asking people to sign at the top of an insurance form, before they filled it out, improved the accuracy of the information they provided.

Dr. Gino posted a statement thanking the bloggers for unearthing “serious anomalies,” which she said “takes talent and courage and vastly improves our research field.”

Around the same time, the bloggers alerted Harvard to the suspicious data points in four of her own papers, including her portion of the same sign-at-the-top paper that led to questions about Dr. Ariely’s work.

The allegations prompted the investigation that culminated with her suspension from Harvard this June. Not long after, the bloggers publicly revealed their evidence: In the sign-at-the-top paper, a digital record in an Excel file posted by Dr. Gino indicated that data points were moved from one row to another in a way that reinforced the study’s result.

Dr. Gino now saw the blog in more sinister terms. She has cited examples of how Excel’s digital record is not a reliable guide to how data may have been moved.

“What I’ve learned is that it’s super risky to jump to conclusions without the complete evidence,” she told me.

Dr. Gino’s life these days is isolated. She lost access to her work email. A second mass-market book, which was to be published in February, has been pushed back. One of her children attends a day care on the campus of Harvard Business School, from which she has been barred.

“I used to do the pickups and drop-offs, and now I don’t,” she told me. “And the few times where I’m the one going, I feel this sense of great sadness,” she said. “What if I run into a colleague and now they report me to the dean’s office that somehow I’m on campus?”

She spends much of her time laboring over responses to the accusations, which can be hard to refute.

Harvard Business School has suspended Dr. Gino without pay and is seeking to revoke her tenure.

Credit = David Degner for The New York Times

In a paper concluding that people have a greater desire for cleansing products when they feel inauthentic, the bloggers flagged 20 strange responses to a survey that Dr. Gino had conducted. In each case, the respondents listed their class year as “Harvard” rather than something more intuitive, like “sophomore.”

Though the “Harvard” respondents were only a small fraction of the nearly 500 responses in the survey, they suspiciously reinforced the study’s hypothesis.

Dr. Gino has argued that most of the suspicious responses were the work of a scammer who filled out her survey for the $10 gift cards she offered participants — the responses came in rapid succession, and from suspicious I.P. addresses.

But it’s strange that the scammer’s responses would line up so neatly with the findings of her paper. When I pointed out that she or someone else in her lab could be the scammer, she was unbowed.

“I appreciate that you’re being a skeptic,” she told me, “since I think I’m going to be more successful in proving my innocence if I hear all the possible questions that show up in the mind of a skeptic.”

More damningly, the bloggers recently posted evidence, culled from retraction notices that Harvard sent to journals where Dr. Gino’s disputed articles appeared, indicating that much more of the data collected for these studies was tampered with than they initially documented.

In one study, forensic consultants hired by Harvard wrote, more than half the responses “contained entries that were modified without apparent cause,” not just the handful that the bloggers initially flagged.

Dr. Gino said it wasn’t possible for Harvard’s forensics consultants to conclude that she had committed fraud in that instance because the consultants couldn’t examine the original data, which was collected on paper and no longer exists.

Notwithstanding the evidence, the manner in which Harvard investigated her could ensure that the case remains officially unresolved for years. Dr. Gino’s lawsuit, which she filed in August, claims that the Data Colada bloggers offered to delay posting the evidence of fraud until Harvard investigated.

Harvard reacted, she claims, by creating a more aggressive policy for investigating misconduct and applied it to her case. Unlike the older version, the new policy contained rigid timetables for each phase of the investigation, like giving her 30 days to respond to an investigative report, and instructed an administrator to take custody of her research records.

The suit argues that applying the new policy breached Dr. Gino’s employment contract and constituted gender discrimination because the business school did not subject men in similar situations to the same treatment. Dr. Gino further argued that the school had disciplined her without meeting the new policy’s burden of proof, and that both Harvard and Data Colada had defamed her by indicating to others that she had committed fraud.

Brian Kenny, a spokesman for the business school, said the lawsuit did not present a complete picture of “the facts that led to the findings and recommended institutional actions.” He added: “We believe that Harvard ultimately will be vindicated.” Harvard will file a legal response in the coming weeks.

In an email to faculty in mid-August, the dean of Harvard Business School, Srikant Datar, implied that the accusations against Dr. Gino had prompted a change in policy because they were “the first formal allegations of data falsification or fabrication the school had received in many years.” He wrote that the new policy closely resembled policies at other schools at Harvard.

Even in the midst of her professional disgrace, Dr. Gino finds herself with some sympathetic colleagues, who are outraged at their employer’s treatment of a tenured professor. Five of Dr. Gino’s tenured colleagues at the business school told me that they had concerns about the process used to investigate Dr. Gino. Some found it disturbing that the school appeared to have created a policy prompted specifically by her case, and some worried that the case set a precedent allowing other freelance critics to effectively initiate investigations. (A sixth colleague told me that he was not troubled by the process and was confident in Dr. Gino’s guilt.)

Most of the faculty members requested anonymity because of the legal complications — the university’s general counsel distributed a note instructing faculty members not to discuss the case shortly after Dr. Gino filed her complaint.

Researchers accused of fraud rarely win lawsuits against their institutions or their accusers. But some experts have argued that Dr. Gino could stand better odds than most, partly because of the business school’s apparent adoption of a new policy to investigate misconduct in her case.

In October, dozens of Dr. Gino’s co-authors will disclose their early efforts to review their work with her, part of what has become known as the Many Co-Authors project. Their hope is to try to replicate many of the papers eventually.

But the credibility questions extend beyond her, and there is no similar project focusing on the work of other behavioral scientists whose results have drawn skepticism — including Dr. Ariely, who stands accused of similar misconduct, albeit in only one instance.

(Dr. Ariely indicated to The Financial Times in August that Duke was investigating him, though he remains a faculty member there and the school said it couldn’t comment. The publisher of his Ten Commandments paper said it was reviewing the article, which other scholars have struggled to replicate. Dr. Ariely said that he was unaware of the review and that he and his colleagues had recently replicated the result in a new study that was not yet public.)

In an interview, Dr. Kahneman, the Nobel Prize winner, suggested that while the efforts of scholars like the Data Colada bloggers had helped restore credibility to behavioral science, the field may be hard-pressed to recover entirely.

“When I see a surprising finding, my default is not to believe it,” he said of published papers. “Twelve years ago, my default was to believe anything that was surprising.”

The half-bearded behavioral economist Dan Ariely tends to preface discussions of his work, which has inquired into the mechanisms of pain, manipulation, and lies, with a reminder that he comes by both his eccentric facial hair and his academic interests honestly. He tells a version of the story in the introduction to his breezy first book, "Predictably Irrational," a patchwork of marketing advice and cerebral self-help. One afternoon in Israel, Ariely, an "18-year-old military trainee," according to the Times, was nearly incinerated. "An explosion of a large magnesium flare, the kind used to illuminate battlefields at night, left 70 percent of my body covered with third-degree burns," he writes. He spent three years in the hospital, a period that estranged him from the routine practices of everyday life. The nurses, for example, stripped his bandages all at once, as per the cliché. Ariely suspected that he might prefer a gradual removal, even if the result was a greater sum of agony. In an early psychological experiment he later conducted, he submitted this instinct to empirical review. He subsequently found that certain manipulations of an unpleasant experience might make it seem milder in hindsight. In onstage patter, he referred to a famous study in which researchers gave colonoscopy patients either a painful half-hour procedure or a painful half-hour procedure that concluded with a few additional minutes of lesser misery. The patients preferred the latter, and this provided a reliable punch line for Ariely, who liked to say that the secret was to "leave the probe in." This was not, strictly speaking, optimal: why should we prefer the scenario with bonus pain? But all around Ariely people seemed trapped by a narrow understanding of human behavior. "If the nurses, with all their experience, misunderstood what constituted reality for the patients they cared so much about, perhaps other people similarly misunderstand the consequences of their behaviors," he writes.
“Predictably Irrational,” which was published in 2008, was an instant airport-book classic, and augured an extraordinarily successful career for Ariely as an enigmatic swami of the but-actually circuit.

Ariely was born in New York City in 1967 and grew up north of Tel Aviv; his father ran an import-export business. He studied psychology at Tel Aviv University, then returned to the United States for doctoral degrees in cognitive psychology at the University of North Carolina and in business administration at Duke. He liked to say that Daniel Kahneman, the Nobel Prize-winning Israeli American psychologist, had pointed him in this direction. In the previous twenty years, Kahneman and his partner, Amos Tversky, had pioneered the field of “judgment and decision-making,” which revealed the rational-actor model of neoclassical economics to be a convenient fiction. (The colonoscopy study that Ariely loved, for example, was Kahneman’s.) Ariely, a wily character with a vivid origin story, presented himself as the natural heir to this new science of human folly. In 1998, with his pick of choice appointments, he accepted a position at M.I.T. Despite having little training in economics, he seemed poised to help renovate the profession. “In Dan’s early days, he was the most celebrated young intellectual academic,” a senior figure in the discipline told me. “I wouldn’t say he was known for being super careful, but he had a reputation as a serious scientist, and was considered the future of the field.”

The new discipline might have lent itself to a tragic view of life. Our preferences were arbitrary and incoherent; no narrator was reliable. What differentiated Ariely was his faith that we could be managed. “It is very sad that we are fallible, myopic, vindictive, and emotional,” he told me by e-mail. “But in my view this perspective also means, and this is the optimistic side, that we can do much better.” Take, for example, cheating. If people are utility-maximizing agents, they will fleece as much as they can get away with. Ariely believed, to the contrary, that a potential cheater has to balance two conflicting desires: the urge to max out his gains and the need to see himself as a good person. In experiments, Ariely found that most people cheat when given the opportunity—but just a little. Ariely, who does not shy from cutesiness, called this the “fudge factor.” In turn, he proposed, people might just need to be reminded that they aspire to be decent. In one of his most famous experiments, he asked students to score their own math tests. Half the students had first been asked to list the Ten Commandments. Although most could recall only a few, Ariely found that, in this group, “nobody cheated.” The insight was simple, the intervention subtle, and the consequences enormous.

Ariely came to owe his reputation to his work on dishonesty. He offered commentary in documentaries on Elizabeth Holmes and pontificated about Enron. As Remy Levin, an economics professor at the University of Connecticut, told me, “People often go into this field to study their own inner demons. If you feel bad about time management, you study time inconsistency and procrastination. If you’ve had issues with fear or trauma, you study risk-taking.” Pain was an obvious place for Ariely to start. But his burn scars heightened his sensitivity to truthfulness. Shane Frederick, a professor at Yale’s business school, told me, “One of the first things Dan said to me when we met was ‘Would you ever date someone who looked like me?’ And I said, ‘No fucking way,’ which was a really offensive thing to say to someone—but it weirdly seemed to charm Dan.” From that moment, Frederick felt, Ariely was staunchly supportive of his career. At the same time, Ariely seemed to struggle with procedural norms, especially when they seemed pointless. Once, during a large conference, John Lynch, one of Ariely’s mentors, was rushed to the hospital. Ariely told me that only family members were allowed to visit. He pretended that his scarring was an allergic reaction and, once he was admitted, spent the night by Lynch’s side. In his telling, the nurse was in on the charade. “We were just going through the motions so that she could let me in,” he told me. But a business-school professor saw it differently. “Dan was seen as a hero because he had this creative solution,” she said. “But the hospital staff, even though they knew this wasn’t a real allergic reaction, weren’t allowed to not admit him. He was just wasting their time because he felt like he shouldn’t have to follow their rules.”

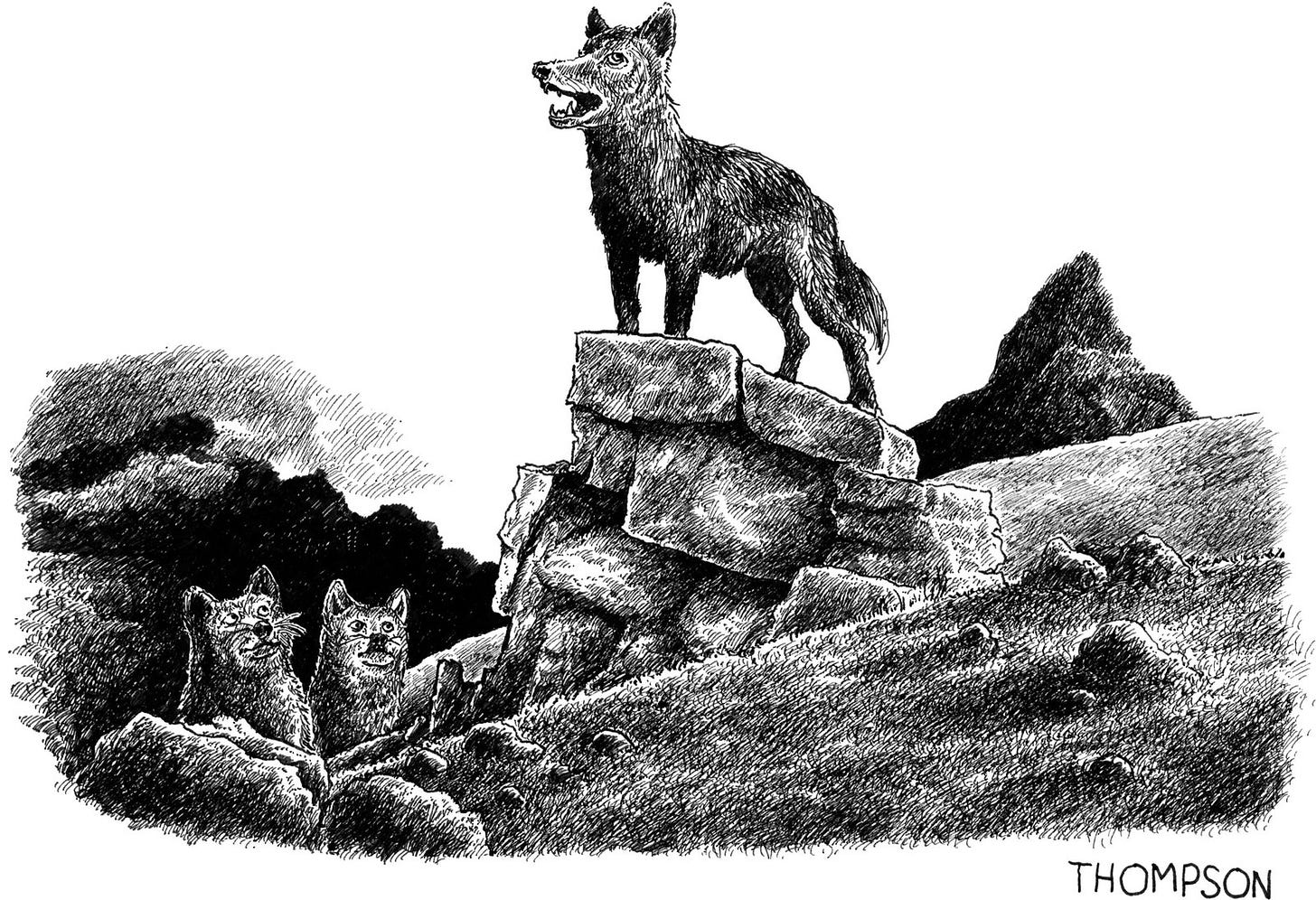

“Well, technically, I have an abrasive personality and a tendency to alienate everyone around me, but, sure, let’s go with ‘lone wolf.’ ”

Cartoon by Jake Thompson

A decade or so into his career, Ariely’s focus shifted to applied research. A former affiliate told me that Ariely once said, “Some behavioral economist is going to win the Nobel Prize—what do I have to do to be in contention?” (Ariely denies wondering whether he would get the Nobel Prize.) In the spring of 2007, he asked an insurance company if he could replace its ordinary automobile-policy review form with experimental versions of his own. Customers had an incentive to underreport their annual mileage, in order to pay lower premiums. Half the participants were to receive a form that asked them to sign an honesty declaration at the end. The other half were to receive an alternate version, which instructed them to sign a pledge at the beginning. The following year, on his first book tour, Ariely addressed a crowd at Google, where he was later contracted to advise on a behavioral-science project, and referred in passing to the experiment’s results. Those who signed at the beginning, he said, had been more candid than those who signed at the end. “This was all about decreasing the fudge factor,” he said. In 2009, Ariely noted in the Harvard Business Review that the insurance company had updated its own forms to exploit his finding. He hadn’t yet published the study, which, given its obvious importance, might have seemed peculiar. But, at the time, nothing appeared to indicate that the results weren’t trustworthy. “People who go through a tragedy like Dan, with his burn—they have an insight into what’s important in life,” the filmmaker Yael Melamede, who collaborated with Ariely on a documentary about dishonesty, told me. “He was very aware of the dangerous desire to make experiments go your way, to bend reality to your benefit.”

Despite a good deal of readily available evidence to the contrary, neoclassical economics took it for granted that humans were rational. Kahneman and Tversky found flaws in this assumption, and built a compendium of our cognitive biases. We rely disproportionately on information that is easily retrieved: a recent news article about a shark attack seems much more relevant than statistics about how rarely such attacks actually occur. Our desires are in flux—we might prefer pizza to hamburgers, and hamburgers to nachos, but nachos to pizza. We are easily led astray by irrelevant details. In one experiment, Kahneman and Tversky described a young woman who had studied philosophy and participated in anti-nuclear demonstrations, then asked a group of participants which inference was more probable: either “Linda is a bank teller” or “Linda is a bank teller and is active in the feminist movement.” More than eighty per cent chose the latter, even though it is a subset of the former. We weren’t Homo economicus; we were giddy and impatient, our thoughts hasty, our actions improvised. Economics tottered.

Behavioral economics emerged for public consumption a generation later, around the time of Ariely’s first book. Where Kahneman and Tversky held that we unconsciously trick ourselves into doing the wrong thing, behavioral economists argued that we might, by the same token, be tricked into doing the right thing. In 2008, Richard Thaler and Cass Sunstein published “Nudge,” which argued for what they called “libertarian paternalism”—the idea that small, benign alterations of our environment might lead to better outcomes. When employees were automatically enrolled in 401(k) programs, twice as many saved for retirement. This simple bureaucratic rearrangement improved a great many lives.

Thaler and Sunstein hoped that libertarian paternalism might offer “a real Third Way—one that can break through some of the least tractable debates in contemporary democracies.” Barack Obama, who hovered above base partisanship, found much to admire in the promise of technocratic tinkering. He restricted his outfit choices mostly to gray or navy suits, based on research into “ego depletion,” or the concept that one might exhaust a given day’s reservoir of decision-making energy. When, in the wake of the 2008 financial crisis, Obama was told that money “framed” as income was more likely to be spent than money framed as wealth, he enacted monthly tax deductions instead of sending out lump-sum stimulus checks. He eventually created a behavioral-sciences team in the White House. (Ariely had once found that our decisions in a restaurant are influenced by whoever orders first; it’s possible that Obama was driven by the fact that David Cameron, in the U.K., was already leaning on a “nudge unit.”)

The nudge, at its best, was modest—even a minor potential benefit at no cost pencilled out. In the Obama years, a pop-up on computers at the Department of Agriculture reminded employees that single-sided printing was a waste, and that advice reduced paper use by six per cent. But as these ideas began to intermingle with those in the adjacent field of social psychology, the reasonable notion that some small changes could have large effects at scale gave way to a vision of individual human beings as almost boundlessly pliable. Even Kahneman was convinced. He told me, “People invented things that shouldn’t have worked, and they were working, and I was enormously impressed by it.” Some of these interventions could be implemented from above. Brian Wansink, a researcher at Cornell, reported that an attractive wire rack and a lamp increased fruit sales at a school by fifty-four per cent, and that buffet diners likely consumed fewer calories when “cheesy eggs” weren’t immediately at hand. Other techniques were akin to personal mind cures. In 2010, the Harvard Business School professor Amy Cuddy purported to show that subjects who held an assertive “power pose” could measurably improve their confidence and “instantly become more powerful.” In advance of job interviews, prospective employees retreated to corporate bathrooms to extend their arms in victorious V’s.

In 2017, Thaler won the Nobel Prize for his analysis of “economic decision-making with the aid of insights from psychology.” Some policy nudges did not ultimately survive empirical scrutiny (though early studies showed that making organ donation opt-out rather than opt-in would cause the practice to become more widespread, long-term evaluations suggested that it had little effect), but the bulk of them held up. By that point, however, a maximalist version of the principle—easily absorbed by viral life-hack culture—had become commonplace. Ariely, for his part, predicted that nudges were just the beginning, and held out for more ambitious social engineering. He told me, “I thought that in many cases paternalism is going to be necessary.” At the end of “Predictably Irrational,” he writes, “If I were to distill one main lesson from the research described in this book, it is that we are pawns in a game whose forces we largely fail to comprehend.”

Haaretz once called Ariely “the busiest Israeli in the world.” I met him several times in the past year, although he agreed to speak on the record mostly in writing. A stimulating and slightly unnerving interlocutor, he has coarse black bangs, tented eyebrows, and the frank but hooded aspect of an off-duty mentalist or a veteran card-counter. “Predictably Irrational” considerably expanded his sphere of influence. He started a lab at Duke called the Center for Advanced Hindsight, which was funded by BlackRock and MetLife. He had a wife and two young children in Durham, but spent only a handful of days a month in town. In a given week, he might fly from São Paulo to Berlin to Tel Aviv. At talks, he wore rumpled polos and looked as though he’d trimmed his hair with a nail clipper in an airport-lounge rest room. He has said that he worked with multiple governments and Apple. He had ideas for how to negotiate with the Palestinians. When an interviewer asked him to list the famous names in his phone contacts, he affected humility: “Jeff Bezos, the C.E.O. of Amazon—is that good?” He went on: the C.E.O.s of Procter & Gamble and American Express, the founder of Wikipedia. In 2012, he said, he got an e-mail from Prince Andrew, who invited him to the palace for tea. Ariely’s assistant had to send him a jacket and tie via FedEx. He couldn’t bring himself, as an Israeli, to say “Your Royal Highness,” so he addressed the Prince by saying “Hey.”

Ariely seemed to know everything and everyone. “What an amazing life to lead,” a former doctoral student in his lab said. “It was like ‘The Grand Budapest Hotel.’ ” He told people that he’d climbed Annapurna and rafted down the Mekong River. But he was also attentive. “Every single time I went into the room and interacted with Dan, it was unbelievably enjoyable,” the student said. At one talk, he auctioned off a hundred-dollar bill, with the stipulation that the second-highest bidder would also have to pay. The winner owed a hundred and fifty dollars; the loser owed a hundred and forty-five dollars for nothing. Both might have felt like idiots, but Ariely wasn’t scornful; he sympathized. His knowledge of human behavior could be burdensome. “It makes daily interactions a little difficult,” he said. “I know all kinds of methods to convince people to do things I want them to do.” He told me, “Just imagine that you could separate the people who are your real friends from the people who want something from you. . . . And now ask yourself if you really want to know this about them.”

One of his frequent collaborators was Francesca Gino, a rising star in the field. Gino is in her mid-forties, with dark curly hair and a frazzled aspect. She grew up in Italy, where she pursued a doctorate in economics and management. Members of her cohort remember her dedication, industry, and commitment. She first came to Harvard Business School as a visiting fellow, and, once she completed her Ph.D., in 2004, she stayed on as a postdoc. She later said that she went to Harvard for a nine-month stint and never left. This story elides a few detours. By the end of her postdoc, in 2006, she had yet to publish an academic paper, and Harvard did not extend an offer. One of her mentors at Harvard, a professor named Max Bazerman, helped make introductions; she eventually landed a postdoc at Carnegie Mellon. A senior colleague who knew her at the time told me, “That entire experience could plausibly have left her with a keen sense of the fragility and precariousness of academic careers.” At last, she seemed to find her footing, and it soon looked as though she could get almost any study to produce results. She secured a job at U.N.C., where she entered a phase of elevated productivity. According to her C.V., she published seven journal papers in 2009; in 2011, an astonishing eleven.

Ariely and Gino frequently collaborated on dishonesty. In the paper “The Dark Side of Creativity,” they showed that “original thinkers,” who can dream up convincing justifications, tend to lie more easily. For “The Counterfeit Self,” she and Ariely had a group of women wear what they were told were fake Chloé sunglasses—the designer accessories, in an amusing control, were actually real—and then take a test. They found that participants who believed they were wearing counterfeit sunglasses cheated more than twice as much as the control group. In “Sidetracked,” Gino’s first pop-science book, she seems to note that such people were not necessarily corrupt: “Being human makes all of us vulnerable to subtle influences.” In 2010, she returned to Harvard Business School, where she was awarded an endowed professorship and later became the editor of a leading journal. She dispensed page-a-day-calendar advice on LinkedIn: “Life is an unpredictable journey. . . . The challenge isn’t just setting our path, but staying on it amidst chaos.” She was a research consultant for Disney, and a speakers bureau quoted clients between fifty and a hundred thousand dollars to book her for gigs. In 2020, she was the fifth-highest-paid employee at Harvard, earning about a million dollars that year—slightly less than the university’s president.

Gino drew admiring notice from those who could not believe her productivity. The business-school professor said, “She’s not just brilliant and successful and wealthy—she has been a kind, fun person to know. She was well liked even by researchers who were skeptical of her work.” But she drew less admiring notice, too—also from people who could not believe her productivity. As one management scholar told me, “You just cannot trust someone who is publishing ten papers a year in top journals.” Other co-authors, as collateral beneficiaries, weren’t sure what to think. One former graduate student thought that she caught Gino plagiarizing portions of a literature review, but tried to convince herself that it was an honest error. Later, in a study for a different paper, “Gino was, like, ‘I had an idea for an additional experiment that would tie everything together, and I already collected the data and wrote it up—here are the results.’ ” The former graduate student added, “My adviser was, like, ‘Did you design the study together? No. Did you know it was going to happen? No. Has she sent you the data? No. Something off is happening here.’ ” (Gino declined to address these allegations on the record.)

In late 2010, Gino was helping to coördinate a symposium for an Academy of Management conference, on “behavioral ethics,” which listed Ariely as a contributor. At the time, Gino and Bazerman were researching moral identity. Ariely’s findings with the car-insurance company remained unpublished, but his talks had made the rounds, and his field study seemed like the perfect companion piece for joint publication. “I suggest we add them as co-authors and write up the paper for a top tier journal,” Gino later wrote, by e-mail.

The paper, which was published in 2012, became an event. Signing the honesty pledge at the beginning, Ariely found, reduced cheating by about ten per cent. The Obama Administration included the paper’s findings in an annual White House report. Government bodies in the U.K., Canada, and Guatemala initiated studies to determine whether they should revise their tax forms, and estimated that they might recoup billions of dollars a year. Kahneman told me that he saw no reason to disbelieve the results, which were clearly compatible with the orientation of the field. “But many things that might work don’t,” he told me. “And it’s not necessarily clear a priori.”

Near the end of Obama’s first term, vast swaths of overly clever behavioral science began to come unstrung. In 2011, the Cornell psychologist Daryl Bem published a journal article that ostensibly proved the existence of clairvoyance. His study participants were able to predict, with reasonable accuracy, which curtain on a computer screen hid an erotic image. The idea seemed parodic, but Bem was serious, and had arrived at his results using methodologies entirely in line with the field’s standard practices. This was troubling. The same year, three young behavioral-science professors—Joe Simmons, Leif Nelson, and Uri Simonsohn—published an actual parody: in a paper called “False-Positive Psychology,” they “proved” that listening to the Beatles song “When I’m Sixty-Four” rendered study participants literally a year and a half younger. “It was hard to think of something that was so crazy that no one would believe it, because compared to what was actually being published in our journals nothing was that crazy,” Nelson, who teaches at U.C. Berkeley, said. Researchers could measure dozens of variables and perform reams of analyses, then publish only the correlations that happened to appear “significant.” If you tortured the data long enough, as one grim joke went, it would confess to anything. They called such techniques “p-hacking.” As they later put it, “Everyone knew it was wrong, but they thought it was wrong the way it’s wrong to jaywalk.” In fact, they wrote, “it was wrong the way it’s wrong to rob a bank.”
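The mechanism described above can be shown with a small simulation: measure many variables, then report only the ones that come out "significant." This is a minimal sketch under simplifying assumptions that are ours, not the paper's. Every measured outcome is pure noise, so there is no real effect. Outcomes are independent. Each group's scores have a known standard deviation of 1, which allows a simple z-test. The threshold is the conventional 0.05. The function names and parameter values are illustrative.

```python
import math
import random


def two_sample_z_pvalue(a, b):
    """Two-sided p-value for a difference in group means, assuming
    (for simplicity) that both groups have a known standard deviation of 1."""
    n_a, n_b = len(a), len(b)
    diff = sum(a) / n_a - sum(b) / n_b
    z = diff / math.sqrt(1 / n_a + 1 / n_b)
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(n_outcomes, n_per_group=30, n_studies=1000, alpha=0.05, seed=0):
    """Fraction of simulated no-effect studies in which at least one of
    `n_outcomes` measured variables clears the significance threshold."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        for _ in range(n_outcomes):
            # No real effect: both groups are drawn from the same distribution.
            treated = [rng.gauss(0, 1) for _ in range(n_per_group)]
            control = [rng.gauss(0, 1) for _ in range(n_per_group)]
            if two_sample_z_pvalue(treated, control) < alpha:
                hits += 1
                break  # "publish" the first significant outcome and stop looking
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 5, 20):
        # simulated rate vs. the analytic rate for independent tests
        print(k, false_positive_rate(k), 1 - 0.95 ** k)
```

With a single outcome, roughly 5 per cent of these no-effect studies produce a "finding." With twenty independent outcomes, the expected share is one minus 0.95 to the twentieth power, or about 64 per cent. Nothing in the data changed. Only the freedom to choose what to report did.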

The three men—who came to be called Data Colada, the name of their pun-friendly blog—had bonded over the false, ridiculous, and flashy findings that the field was capable of producing. The discipline of judgment and decision-making had made crucial, enduring contributions—the foundation laid by Kahneman and Tversky, for example—but the broader credibility of the behavioral sciences had been compromised by a perpetual-motion machine of one-weird-trick gimmickry. Their paper helped kick off what came to be known as the “replication crisis.” Soon, entire branches of supposedly reliable findings—on social priming (the idea that, say, just thinking about an old person makes you walk more slowly), power posing, and ego depletion—started to seem like castles in the air. (Cuddy, the H.B.S. professor, defended her work, later publishing a study that showed power posing had an effect on relevant “feelings.”) Some senior figures in the field were forced to consider the possibility that their contributions amounted to nothing.

In the course of its campaign to eradicate p-hacking, which was generally well intended, Data Colada also uncovered manipulations that were not. The psychologist Lawrence Sanna had conducted studies that literalized the metaphor of a “moral high ground,” determining that participants at higher altitudes were “more prosocial.” When Simonsohn looked into the data, he found that the numbers were not “compatible” with random sampling; they had clearly been subject to tampering. (Sanna, at the time, acknowledged “research errors.”) Simonsohn exposed similar curiosities in the work of the Flemish psychologist Dirk Smeesters. (Smeesters claimed that he engaged only in “massaging” data.) The two men’s careers came to an unceremonious end. Occasionally, these probes were simple: one of the first papers that Data Colada formally examined included reports of “-0.3” on a scale of zero to ten. Other efforts required more recondite statistical analysis. Behind these techniques, however, was a basic willingness to call bullshit. Some of the papers in social psychology and adjacent fields demonstrated effects that seemed, to anyone roughly familiar with the behavior of people, preposterous: when maids are prompted to think of their duties as exercise, do they really lose weight?

Kahneman graciously conceded that he had been wrong to endorse some of this research, and told me, of Data Colada, “They’re heroes of mine.” But not everyone was supportive. Data Colada’s harshest critics saw the young men as jealous upstarts who didn’t understand the soft artistry of the social sciences. Norbert Schwarz, an éminence grise of psychology, interrupted a presentation about questionable research practices at a conference, and later called the burgeoning reform movement a “witch hunt.” A former president of the Association for Psychological Science, in a leaked editorial, referred to such efforts as “methodological terrorism.” When Data Colada posted about Amy Cuddy, it was taken as evidence of borderline misogyny. The Harvard psychologist Daniel Gilbert referred to the “replication police” as “shameless little bullies”; others compared Data Colada to the Stasi. Simonsohn found this analogy hurtful and offensive. “We’re like data journalists,” he said. “All we can do is inform people with power. The only power you have is being right.”

Simmons, Nelson, and Simonsohn maintain a standing Zoom date once a week. Recently, they invited me to join. They’ve been working together long enough to finish one another’s sentences—the only real pleasure in what they do. Their work can be demoralizing, and after each successive fraud investigation they swear off the practice. “It’s pleasant for maybe an hour,” Simonsohn, who teaches at the Esade Business School, in Barcelona, told me. “You notice how they did it, and it feels great, like you wrote a mystery novel. But then everything feels bad.” Simmons, a professor at Wharton, added, “We have this unfortunate fraud detector in our brain. Obviously, it’s just an internal alarm and you have to then check, but you see results sections that stand out as ‘No no no, that’s not a thing.’ ” He remarked that Nelson had sent him a screenshot of a figure from a journal; with only a glance, Simmons would have bet his house that the data were fake, but the men didn’t plan to pursue the case. “At this point, it’s like an affliction,” Simmons said. “But if you see it you see it, and then it’s hard to look the other way.”

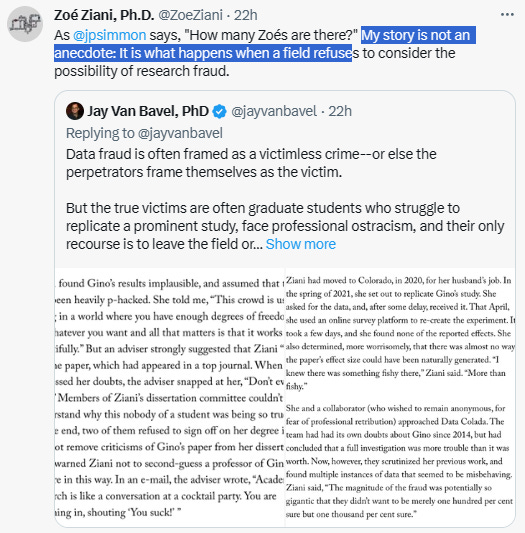

There is a propensity to write off such misconduct as a victimless crime. In the spring of 2021, Data Colada was contacted by Zoé Ziani, a recent Ph.D. recipient whose professional trajectory offered an example of the practice’s collateral damage. Ziani is slight and angular, but she projects considerable tensile strength. She grew up in a working-class neighborhood of Paris; her parents had not graduated from college, but she was enchanted by academia. “People were paid just to think and talk about how things work,” she told me. Assessing the fallout of the financial crisis, she found herself wondering how an entire industry could have developed a culture of malfeasance. “Nobody reacted before the worst happened,” she said. “Nobody raised their hands to say, ‘This is really risky, and we should stop.’ ”

In graduate school, Ziani took up the question of how individuals form and exploit professional networks—such as the ones she had to assemble from nothing. One recent high-profile contribution to the networking literature was a paper by Gino. Some participants had been asked to think of a time they had networked in an “instrumental” way, and then to fill in the blanks for prompts such as “W _ _ H,” “SH _ _ ER,” and “S _ _ P.” These people were more likely to complete the prompts with such cleaning-related words as “WASH,” “SHOWER,” and “SOAP”—in other words, networking made them feel literally unclean.

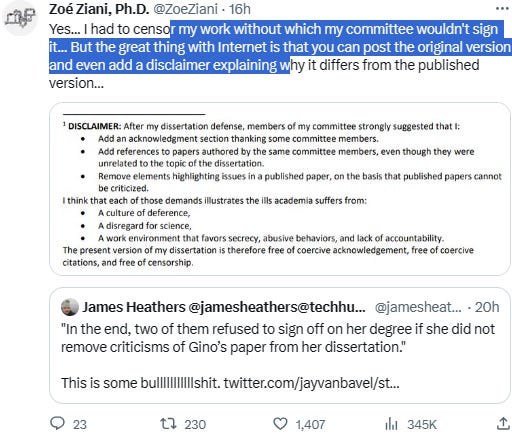

Ziani found Gino’s results implausible, and assumed that they had been heavily p-hacked. She told me, “This crowd is used to living in a world where you have enough degrees of freedom to do whatever you want and all that matters is that it works beautifully.” But an adviser strongly suggested that Ziani “build on” the paper, which had appeared in a top journal. When she expressed her doubts, the adviser snapped at her, “Don’t ever say that!” Members of Ziani’s dissertation committee couldn’t understand why this nobody of a student was being so truculent. In the end, two of them refused to sign off on her degree if she did not remove criticisms of Gino’s paper from her dissertation. One warned Ziani not to second-guess a professor of Gino’s stature in this way. In an e-mail, the adviser wrote, “Academic research is like a conversation at a cocktail party. You are storming in, shouting ‘You suck!’ ”

Ziani complied, but her professional relationships had deteriorated, and she soon left the cocktail party for good. When she told me these stories, in a wood-panelled bar at a historic hotel in Boulder, Colorado, she covered her face to cry. Simmons told me that he could name countless people who had similar experiences. “Some people are hurt by this stuff and they don’t even know. They think they’re not good enough—‘It must be me’—so they leave the field,” he said. “That’s where I started to get angry. How many Zoés are there?”

Ziani had moved to Colorado, in 2020, for her husband’s job. In the spring of 2021, she set out to replicate Gino’s study. She asked for the data, and, after some delay, received it. That April, she used an online survey platform to re-create the experiment. It took a few days, and she found none of the reported effects. She also determined, more worrisomely, that there was almost no way the paper’s effect size could have been naturally generated. “I knew there was something fishy there,” Ziani said. “More than fishy.”

She and a collaborator (who wished to remain anonymous, for fear of professional retribution) approached Data Colada. The team had had its own doubts about Gino since 2014, but had concluded that a full investigation was more trouble than it was worth. Now, however, they scrutinized her previous work, and found multiple instances of data that seemed to be misbehaving. Ziani said, “The magnitude of the fraud was potentially so gigantic that they didn’t want to be merely one hundred per cent sure but one thousand per cent sure.”

One day, Ziani came across the field study from the car-insurance paper. This data was the fishiest of all, and she sent the file to Data Colada in triumph. On a Zoom call, Simonsohn looked more closely and realized, “Hey, wait a minute. This wasn’t Francesca?” The study had been conducted by Ariely. Later, they opened the file for Gino’s contribution to the same paper, and that, too, seemed incommensurable with real data. It was difficult not to read this as a sign of the field’s blight. Simmons told me, “We were, like, Holy shit, there are two different people independently faking data on the same paper. And it’s a paper about dishonesty.”

In 2021, the Data Colada team sent a dossier to Harvard that outlined an array of anomalies in four of Gino’s papers. In her lab study for the car-insurance paper, for example, several observations seemed to be out of order, in a way that suggested someone had moved them around by hand. Those data points, they found, were disproportionately responsible for the result. The team was unable to conceive of a benign explanation for this pattern. They had examined only four papers but noted “strong suspicions” about some of her published data going as far back as 2008. On October 27, 2021, Harvard notified Gino that she was under investigation, and asked her to turn over all “HBS-issued devices” by 5 p.m. that day. According to Gino, the police were called to oversee the process.
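The "out of order" clue can be made concrete with a toy check. This is a hypothetical sketch, not Data Colada's actual procedure. It assumes a file that was sorted by condition and then by participant ID, represented as a list of `(participant_id, condition)` pairs in file order, and it flags every row where the ID drops below the one before it inside the same condition block. The function name and data layout are illustrative inventions.

```python
def sort_breaks(rows):
    """Return indices of rows that break ascending-ID order within a
    contiguous block of the same condition.

    rows: list of (participant_id, condition) tuples in file order.
    Hypothetical layout: the file is assumed to be sorted by condition, then ID.
    """
    breaks = []
    for i in range(1, len(rows)):
        prev_id, prev_cond = rows[i - 1]
        cur_id, cur_cond = rows[i]
        # Compare neighbours only inside the same condition block;
        # a new block is allowed to restart its ID sequence.
        if cur_cond == prev_cond and cur_id < prev_id:
            breaks.append(i)
    return breaks


if __name__ == "__main__":
    rows = [(1, "A"), (2, "A"), (3, "A"), (51, "A"), (4, "A"), (5, "A"),
            (6, "B"), (7, "B"), (8, "B")]
    print(sort_breaks(rows))  # [4]
```

A flagged index marks where the sequence breaks, not which row was moved. In the example, the break appears at index 4 because the row with ID 51 sits just above it. Whether such a break points to tampering depends on how the file was produced. That is why, in the account above, the team also checked how much the displaced observations contributed to the result.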

There had always been some bewilderment about Gino. Multiple people told me they found it abnormal that Gino so closely guarded her data at every step of the process. One former co-author said, “H.B.S. is so hierarchical, it’s like the military, and it was unheard of for the more senior person to do the bitch work and let the junior person have the lofty thoughts. But, then again, if I had done the grunt work, we would not have found significant results.” The networking paper that had originally drawn Ziani’s scrutiny had also seemed dubious to the former graduate student. “There were all kinds of red flags about her sample size, significance, and effect size, and I was, like, ‘No way, I’m done with this person,’ ” she said. Another professor I spoke to didn’t buy one of Gino’s early papers. “When you look at it, it just makes no sense,” he said. But, he added, “even in safe spaces in my world, to bring up that someone is a data fabricator—it’s, like, ‘Our friend John, do you think he might be a cannibal?’ ”

Concerns, however, had been raised. In 2012, Lakshmi Balachandra, now a professor at Babson, told Bazerman, Gino’s mentor, that Gino’s work seemed too good to be true. Balachandra said, “He basically said to me, ‘Oh, she’s such a hard worker, you could learn a lot from her.’ ” (Bazerman declined to comment about this on the record.) In 2015, a graduate student lodged a complaint against Gino and one of her colleagues, alleging, primarily, that Gino and the colleague created a tense and belittling environment. The more unsettling charge, though, was that Gino had repeatedly refused to share the raw data from their experiments. Once, after the student didn’t hand over an analysis during a long weekend, Gino ran the study herself and produced much stronger results. On multiple occasions, the student voiced concerns to a faculty review board that Gino was playing games with data, but the board was unresponsive. A three-month investigation concluded, in a confidential report, that none of the people involved had acquitted themselves particularly well, but that no action was warranted. “What incredibly low standards Harvard Business School must have to not take concerns about data manipulation seriously,” the student wrote. (Harvard declined to comment on personnel matters.)

Gino has maintained that she never falsified or fabricated data. In a statement, her lawyer said, “Harvard’s complete and utter disregard for evidence, due process, confidentiality and gender equity should frighten all academic researchers. And Data Colada’s vicious take-down is baseless.” (She declined to comment on other matters on the record.) Lawrence Lessig, a law professor at Harvard, told me he is certain that Gino is innocent. “I’m convinced about her because I know her,” he said. “That’s the strongest reason why I can’t believe this has happened.”

This spring, Harvard finalized a twelve-hundred-page report that found Gino culpable. As part of its investigation, Harvard obtained the original data file for one of Gino’s studies from a former research assistant. An outside firm compared that to the published data and concluded that it had been altered not only in the ways Data Colada had predicted but in other ways as well. Gino’s defense, in that case, seems to be that the published data are in fact the real data, and that the “original” data are somehow not. Data Colada titled a blog post about her alleged misdeeds “Clusterfake.”

According to Gino, she was summoned to the office of the dean, who explained that she would be placed on administrative leave, and that he was instituting perhaps unprecedented proceedings to revoke her tenure. She wept. The dean told her, “You are a capable, smart woman. I am sure you’ll find other opportunities.” That day, journal editors were advised to begin the retraction process.

In September, NBC premièred a prime-time procedural called “The Irrational,” starring the “Law & Order” veteran Jesse L. Martin as a behavioral scientist, inspired by Ariely, who helps solve crimes. “Understanding human nature can be a superpower, which is why the F.B.I. ends up calling me,” Martin’s character says. A trade-publication article about the show accidentally described Ariely’s first book as a work of fiction, which inspired Richard Thaler to joke on Twitter, “I have known for years that Dan Ariely made stuff up but now it turns out that it is ok because his book was a novel!” Ariely has also just published a new book, “Misbelief.” In 2020, he writes, the Israeli government sought his help with pandemic-lockdown strategy. “covid in many ways was the highlight of my career,” he told me. He says that he suggested prompting people to wear masks through the altruistic message of “protect others.” He proposed an app-based solution to the rise in domestic violence: children, invited to imagine themselves as superheroes, were encouraged to report disturbances at home. That summer—in an anecdote left out of his book—he told the Israeli press he had suggested that the Army infect soldiers on a base with the coronavirus as an experiment. (Ariely said that his comments were taken out of context and that this was initially someone else’s idea. The former head of the Israel Defense Forces’ personnel directorate confirmed that he had a “very short” conversation with Ariely and “denied his request immediately.”)

He writes that he soon became the subject of Israeli covid-denialist conspiracy theories: in a “parallel universe,” he and his “Illuminati friends” were “in cahoots with Bill Gates” to collaborate “with multiple governments to control and manipulate their citizens.” He was called the “chief consciousness engineer” of the “covid-19 fraud.” One conspiracist posted a photo of Ariely’s burns, he wrote in a draft of the book, and said that his suffering had made him “want to take revenge on the world and kill as many people as possible.” Ariely describes late-night hours spent engaging with trolls online, offering, in one case, to provide his tax returns as proof that his government services were pro bono. The book thrums with a newfound pessimism; Ariely seems to have lost faith in his old parlor tricks. “It’s been a very, very tough few years being exposed to the darkest corners of the Internet and the darkest aspects of human nature,” he told me. It never seems to have occurred to him that the vanity and disdain behind a certain kind of social engineering—keeping the buffet treats just out of reach—might exacerbate ambient resentments.

It remained unclear, however, how potent those interventions had ever been. As the Data Colada team members learned more about the insurance paper, they found that it had long had an asterisk attached to it. In February, 2011, at the beginning of the collaboration, Ariely had sent an Excel file with the insurance company’s data to Nina Mazar, a frequent co-author, for analysis. She found that the results pointed in the wrong direction—people who had signed at the beginning were less honest. Ariely responded that, in making “the dataset nicer” for her, he had relabelled the condition names, accidentally switching them in the process. He instructed her to switch them back. (When asked recently, Ariely reiterated this account, though he added that someone in his lab might have retyped the condition names for him.)

Later, when Bazerman reviewed a draft, he was struck by something else. The over-all numbers suggested that people drove an average of twenty-four thousand miles a year, about twice what he would have expected. When asked about this, Ariely was vague: “We used an older population mostly in Florida—but we can’t tell how we got the data, who was the population (they were all AARP members)—and we also can’t show the forms.” This still seemed odd—why would retirees drive more than commuters? Work on the paper halted. Mazar eventually relayed that the mileage data might have reflected not one but multiple years of driving. Bazerman told me, “It was only then that I kept my name on the paper.”

Four years after publication, Bazerman received an e-mail from a guy named Stuart Baserman, who worked at an Internet insurance company, and had noticed the similar surname on the paper. (Bazerman’s wife suggested that he and Baserman take a DNA test. “Cousin Stu” is now Bazerman’s favorite cousin.) Baserman asked if the paper’s results would hold in an online setting. But several experimental attempts failed, as did a subsequent high-powered lab replication of Gino’s initial lab study. The effect just wasn’t there. (The Guatemalan government, with help from the “nudge unit” in the U.K., had also tried to replicate a version of Ariely’s field study with the insurance company, using tax forms, and found no results.)

Writing up the failed replication, one of the authors noticed something strange in Ariely’s field-study data: there was a large difference between the baseline mileage—the odometer readings taken prior to the study—of the two cohorts. This seemed like a fatal randomization error. The journal’s editors asked if the authors wanted to retract the original publication. Ariely and Gino were against the idea at the time. Ariely predicted that, if anything, it was the second paper that might have to be retracted. “My strong preference is to keep both papers out and let the science process do its job,” he wrote. He and Mazar were continuing to explore the value of honesty pledges. A former senior researcher at the lab told me, “He assured us that the effect was there, that this was a true thing, and I was convinced he completely believed it.”

It didn’t take long for Ziani and Data Colada to determine that the odometer readings were inauthentic. In real life, the distribution of how much people drive looks more or less like a bell curve. These data, however, formed a uniform distribution: the same number of people drove about a thousand miles as did twelve thousand miles as did fifty thousand. Most people, when asked to fill out a form, round off unwieldy numbers to the nearest hundred or thousand. But there were few round numbers in the data set. In a small additional kink, the data were written in two different fonts: Calibri and Cambria. In August, 2021, Data Colada detailed these issues in a blog post. The evidence was overwhelming, and all the paper’s authors agreed immediately that the data were bogus. In statements, each disowned any responsibility. Gino, unaware that she was also being investigated by Data Colada, praised the team for its determination and skill: “The work they do takes talent and courage and vastly improves our research field.” Ariely, apparently taken aback, underscored that he had been the only author who handled the data. He then seemed to imply that the findings could have been falsified only by someone at the insurance company.
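Two of the tells described above can be checked with a few lines of code: a flat distribution where a bell curve should be, and a shortage of round numbers. What follows is a minimal Python sketch under stated assumptions. It runs on simulated mileage figures, not the Hartford file. The function names, the 12,000-mile average, the 50 percent rounding rate, and the bin settings are hypothetical choices made for illustration, and the sketch is not Data Colada's actual method.

```python
import random


def share_rounded(miles, base=100):
    """Fraction of readings that are exact multiples of `base` (e.g. rounded to the nearest hundred)."""
    return sum(1 for m in miles if m % base == 0) / len(miles)


def bin_counts(miles, lo, hi, n_bins=10):
    """Count readings in equal-width bins on [lo, hi); values outside the range are ignored."""
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for m in miles:
        if lo <= m < hi:
            counts[int((m - lo) // width)] += 1
    return counts


def chi_square_vs_uniform(counts):
    """Pearson chi-square statistic against a flat histogram; small values mean 'looks uniform'."""
    expected = sum(counts) / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)


def simulate_self_reported(n, rng):
    """HYPOTHETICAL honest data: bell-shaped mileage, with about half the people rounding to the nearest thousand."""
    miles = []
    for _ in range(n):
        m = max(0, int(rng.gauss(12_000, 4_000)))
        if rng.random() < 0.5:
            m = round(m, -3)  # a person writing "12,000" instead of "12,347"
        miles.append(m)
    return miles


rng = random.Random(0)
human = simulate_self_reported(5_000, rng)
# HYPOTHETICAL fabricated data: readings drawn uniformly between 0 and 50,000,
# standing in for values produced by a spreadsheet's random-number generator.
fake = [rng.randint(0, 50_000) for _ in range(5_000)]

for label, data in (("self-reported", human), ("uniform", fake)):
    rounded = share_rounded(data)
    flatness = chi_square_vs_uniform(bin_counts(data, 0, 50_000))
    print(f"{label}: share rounded to 100 = {rounded:.3f}, chi-square vs. flat = {flatness:.1f}")
```

On the simulated honest reports, the share of round numbers should be large and the chi-square statistic far from zero; on the uniformly generated readings, the reverse should hold. A real forensic analysis would rely on formal tests and on the actual file rather than on simulated data.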

The insurance company in question, which was revealed to be the Hartford, was surprised to find that it had anything to do with the now infamous study. According to an agreement that Ariely signed in 2007, he was not allowed to refer to any of the company’s data without permission—permission that, according to the company, he had neither sought nor received. (Ariely said that he would never share something without approval.) In the paper, the data had been attributed to a company in the “southeastern United States,” which now smacked of deliberate misdirection. “We have been based in Connecticut, and not the Southeast, for more than two hundred years,” a company spokesperson told me. The Hartford had, in fact, completed a small pilot study at Ariely’s request, but it hadn’t been fruitful: there was no discernible difference between those who signed at the top and those who signed at the end. The company never updated its forms, as Ariely had claimed. In May of 2008, about two months before Ariely discussed the results at Google, the Hartford sent him a single data set. During the next ten months, the company said, Ariely wrote at least five times to request additional odometer data, but it provided nothing. In February of 2009, all contact with Ariely ceased. (Ariely says that he has limited recollection of this time, and no paper trail.)

Recently, I obtained the original file of the insurance company’s data. It contains odometer readings for about six thousand cars. These readings are assigned to three different conditions. About half the people were given the company’s “original,” standard form, as a control. (This form couldn’t be tracked down.) On the experimental forms, which have the perfunctory look of a social scientist’s survey materials, a quarter of the participants were instructed to sign a prominent “Pledge of Honesty” at the end, and the remainder to sign one at the beginning. By the time Ariely sent the file to Mazar, three years later, the contents had been transformed. Now the file included about twenty thousand cars, in only two conditions. A comparison of the two files confirms that the data were put through the wringer. About half the cars in the “original” cohort were reassigned to the other conditions, but many appear to have been simply dropped. Among the remaining cars, many never made it to the new file at all, and around half of those that did had their conditions changed. Observations for at least fourteen thousand made-up cars were manufactured, presumably, as Data Colada conjectured, with the help of Excel’s random-number generator—the bulk of which appeared in a different font. According to an unpublished Data Colada analysis, six hundred and fifty of the odometer readings were manually swapped between conditions, which generated the study’s effect. But something went awry along the way, and it wasn’t until Mazar switched the condition labels that the experiment appeared to succeed. Although Ariely told Mazar that he had renamed the conditions, their names are unchanged between the two files. What, then, had he been doing when he was making “the dataset nicer” for her?

Ariely has consistently denied any role in the data manipulation. “I care about understanding what makes us tick, and I would never falsify any data on any experiment,” he told me. He disavowed any involvement in the “history” of the data, saying that he merely served as a conduit for the file; he claimed that his co-authors and the members of his lab also had access to it. Investigators of data fraud rarely have recourse to the equivalent of surveillance-camera footage, so the culprit’s identity may never be known with certainty. In September, 2021, the Hartford sent Ariely a cease-and-desist letter, warning him that, if he continued to suggest that “The Hartford had any role in the erroneous research published in the study,” it would pursue legal action. In the past two years, Ariely has nevertheless continued, privately in English and publicly in Hebrew, to implicate a nameless figure at the Hartford. As the former senior researcher told me, “What Dan says is that he thinks it was just some low-level employee who was doing someone a favor at the insurance company, but they don’t know who it was, and they can’t find out.” This theory is possible; a crooked or inept employee might have taken the file, wangled it to serve Ariely’s hypothesis, and then re-sent it using a non-Hartford e-mail address. It would then have had to escape Ariely’s notice that six thousand observations across three conditions had become twenty thousand observations across only two. (Ariely said that this was the first time he had heard of the third condition, though it was mentioned in the Hartford’s cease-and-desist letter.) Recently, since Gino was put on leave, Ariely has privately speculated that she could have been responsible. He has also mused to colleagues that a lab member could have made the changes. He told Data Colada, however, that he had been the only one to handle the data, and it would have been an enormous risk for a junior researcher to take. (Ariely said that he would never accuse anyone without evidence.) The metadata for the Excel file that he sent to Mazar note that it was created, and last edited, by a user named Dan Ariely.

Ariely, with his vaudevillian flair and commitment to provocation, had never been a perfect fit for the academy. Throughout his career, he performed studies that no one else would have had the courage, or the recklessness, to pursue. One study put survey questions to subjects who were actively masturbating. (Ariely found that men, in a state of excitement, could imagine being aroused by a twelve-year-old girl, animals, and shoes.) Another looked into people’s attitudes about dildos and other sex toys. He once proposed outfitting service workers with protuberant fake nipples to see how the devices would affect tips.

In 2005, Ariely ran an experiment at M.I.T. in which electric shocks were administered to Craigslist volunteers, who had been told that they were testing the efficacy of a painkiller. One of the participants was subjected to more than forty shocks of increasing strength, and broke down in tears. She claims that an assistant in a lab coat told her that she would forfeit payment if she backed out. (The assistant doesn’t recall saying this.) The worst part was the final dehoaxing: in notes from the time, she wrote, “I was informed that there was no pain killer; that they were testing placebos and that all the information that I had been given was fabricated.”